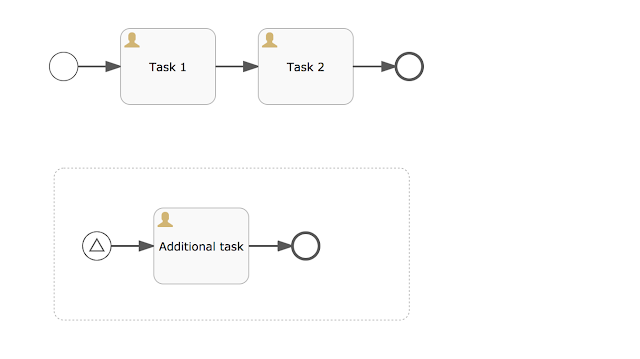

When a process instance is started, "Task 1" will be the active state of the process instance. When the signal start event of the event sub process is triggered, "Task 1" will be terminated and the event sub process is started and the current state of the process instance is "Additional task".

With Flowable 6 there's now also support for non-interrupting event sub processes. The only difference when designing the process definition is configuring the signal start event as not interrupting.

A non-interrupting start event is visually shown as a circle with a dashed line. If you would start a process instance for this process definition, again "Task 1" will be the active state of the process instance. But now, when the signal start event is triggered, "Task 1" will remain active, and an additional execution is created for the event sub process. Therefore, two user tasks will be active "Task 1" and "Additional task".

Let's look at another example, that contains two event sub processes, one on the main process level and one nested inside a sub process.

When we deploy this process definition (as part of an app definition) to the Flowable Task application, we can test the process instance execution by clicking through the task application. Let's start a new process instance in the Flowable Task application and see "Task 1" being created. If you now query the REST API for active event subscriptions (on Tomcat with http://localhost:8080/flowable-task/process-api/runtime/event-subscriptions), you'll see the signal event from the event sub process on the main process level being available.

We could trigger the signal start event, but let's complete "Task 1" first. Now "Sub task 1" is the active task and if you do another event subscription query, you'll see another event subscription has become active. Let's trigger the nested event sub process signal and validate if the non-interrupting behaviour works as expected.

With a REST client like Postman you can do a PUT request to http://localhost:8080/flowable-task/process-api/runtime/executions/{executionId}, with a JSON body defining the signal action and the signal event name.

In this example, the execution id is "12518". But you can look up the execution id in the event subscription query result. When this signal event is triggered, the "Additional sub task" of the event sub process should be created, while keeping the "Sub task 1" task active as well. The process diagram in the Flowable task application should look like this:

Now let's complete the "Sub task 1" task and notice that "Task 2" is not created yet. First we have to complete "Additional sub task". When both tasks have been completed, "Task 2" is created. When executing the event subscription query again, you'll see that there's only the main process level signal event remaining. The nested sub process event subscription is deleted and not available anymore. After "Task 2" is completed, the process instance is also completed and no event subscriptions are available anymore.

Non-interrupting event sub processes provide a great addition to add more flexibility to your process definitions and to be able to create additional user tasks, or execute additional service tasks, in specific use cases. With Flowable 6.0.0, non-interrupting event sub processes on the main process level are support, but with the upcoming 6.0.1 release also nested non-interrupting event sub processes will be supported in the Flowable Engine.